Publish Overview

With a single command, Testspace can publish large volumes of automated test results along with code coverage, static analysis, and other important metrics.

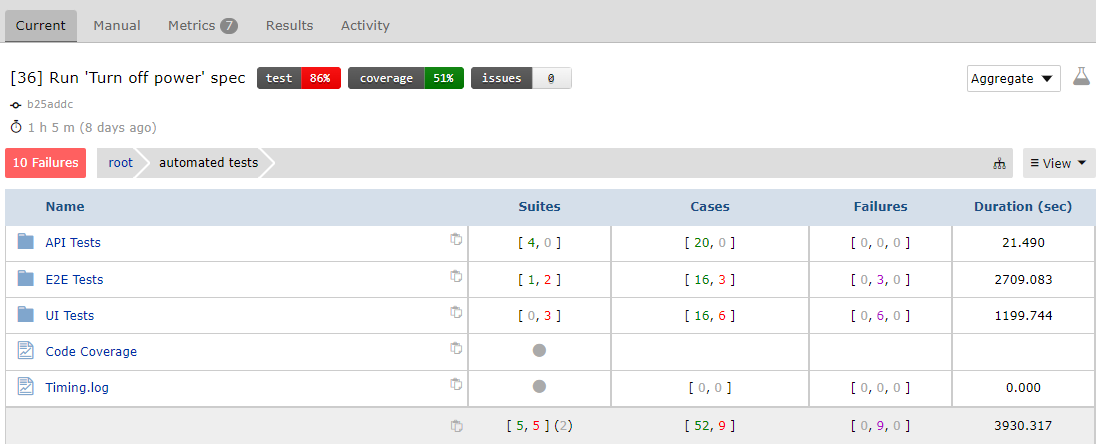

Thanks to built-in CI integrations with GitHub, GitLab, and other systems, Testspace fits seamlessly into the developer workflow, including branches, forks, and pull requests. In addition, it automatically aggregates test results from parallel jobs.

Testspace also includes support for in-house proprietary automation systems.

The following is a partial `.github/workflows/ci.yml` file, shown for example purposes:

```yaml
name: CI

on:
  push:

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: testspace-com/setup-testspace@v1
        with:
          domain: ${{ github.repository_owner }}
      - name: Do testing stuff
        run: your-test-framework --tests
      - name: Push result to Testspace server
        run: |
          testspace ./results/*.xml coverage.xml
        if: always()
```
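The following minimal sketch shows the same workflow split into a CI matrix, which illustrates the parallel-job aggregation described above. The `suite` matrix key and the extra argument passed to `your-test-framework` are hypothetical placeholders. Each matrix job pushes its own results, and this sketch assumes Testspace combines them as this overview describes. It adds no aggregation settings of its own.

```yaml
name: CI

on:
  push:

jobs:
  build:
    strategy:
      # Keep the other matrix job running (and publishing) if one suite fails.
      fail-fast: false
      matrix:
        # Hypothetical suite names; replace with your own split.
        suite: [unit, integration]
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: testspace-com/setup-testspace@v1
        with:
          domain: ${{ github.repository_owner }}
      - name: Do testing stuff
        # Hypothetical: pass the matrix value to select which suite runs.
        run: your-test-framework --tests ${{ matrix.suite }}
      - name: Push results to Testspace server
        # Each parallel job pushes its own results.
        run: |
          testspace ./results/*.xml coverage.xml
        if: always()
```

GitHub Actions cancels the remaining matrix jobs by default when one fails. Setting `fail-fast: false` prevents that, so every suite still reaches its push step.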

The following is an overview of the functionality provided by Testspace:

- Supports a branch-based workflow, including forks and pull requests

- Works with CI matrix builds

- Handles output generated by industry tools (e.g., JUnit, NUnit, TRX)

- Supports publishing code coverage and other artifacts

- Can publish derived Custom Metrics

- Can include additional information, such as links to build machines and annotations (including logs)

- Can automatically organize your test result content based on folders and your source directory structure

To publish content to the Testspace server, simply "push" the file(s) with the Testspace client:

```sh
testspace path/to/**/results*.xml coverage.xml ..
```
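In bash, `**` matches across multiple directory levels only when the `globstar` option is enabled. Otherwise it behaves like a single `*`. If you want the shell to expand the pattern, the push step could look like the following minimal sketch. It assumes a bash shell on the runner and uses the placeholder path `path/to/` from the command above.

```yaml
# Hypothetical variant of the push step from the example workflow.
- name: Push results to Testspace server
  if: always()
  shell: bash
  run: |
    # globstar: ** matches files in any number of subdirectories.
    # nullglob: a pattern with no matches expands to nothing, not to itself.
    shopt -s globstar nullglob
    files=(path/to/**/results*.xml)
    # Fail loudly rather than silently pushing no test results.
    if [ "${#files[@]}" -eq 0 ]; then
      echo "No result files matched path/to/**/results*.xml" >&2
      exit 1
    fi
    testspace "${files[@]}" coverage.xml
```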

Once test results have been published, all team members can monitor the status of the software, regardless of the testing method or build system used. All the metrics (test results, code coverage, defects, etc.) are collected and analyzed.

The Testspace client is designed to handle large volumes of content.

Once test results have been published, the Testspace Dashboard provides:

- Built-in metrics/graphs

- Extensive Failure Tracking Management

- Automatic flaky test analysis

- Insights for process improvements

Quality can be assessed not only with test results but also with other metrics, such as code coverage and custom metrics (e.g., timing, error logs).